Automatic scaling

This chapter explains the logic behind our automatic scaling process.

For more information on how to enable the automatic scaling mode for a Fleet, please see the Automatic Deployment chapter.

For more information on how the deployment process works, please refer to the Deployment Process chapter.

The reasons for having a scaling mechanism

Before explaining how the scaling mechanism (the Scaler) works, let's take a minute to understand why a dynamic deployment system is important.

You will never run out of available game servers

If your game is highly successful, you may experience a sudden influx of players. The base game server capacity deployed onto your own bare metal servers may not be sufficient in that case. You could order more bare metal servers to increase capacity, but by the time we have delivered them, it may be too late to accommodate the new players. This is where cloud scaling comes into play. To fill this gap in game server resources, we have created a dynamic game server deployment system that can scale onto any cloud platform. As a result, you will never have a shortage of game servers. Even if one cloud provider is down entirely, there will still be other cloud providers that we can deploy game servers onto. This way we can all but guarantee game server availability.

Cost savings

Even though you could host your entire game in the cloud, we strongly advise using our bare metal servers as much as possible, or at least for your base requirements. Our bare metal servers simply perform better than cloud VMs. On top of that, renting a bare metal server for a month (or longer) is much cheaper than renting a similarly sized VM for a month.

How the Scaler works (overview)

The Scaler combines the major elements described in this documentation to deploy game servers when and where they are needed. The Scaler needs to know which application builds it needs to deploy (Application Management & Application Build Management) and where to deploy them (Deployment Configuration). Together with live game server status information (Allocation / Live Status), the Scaler knows the number of occupied and free game servers. It can then determine whether there are too few or too many free game servers, and deploy or remove game servers as needed.

This, in a nutshell, is how the Scaler operates.

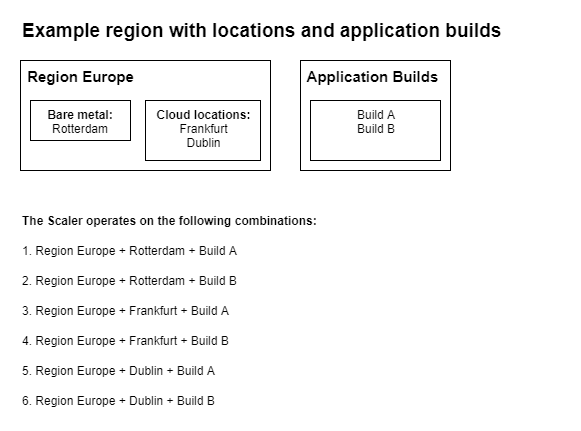

Deployment occurs per-region, per-location, per-ApplicationBuild

The scaling mechanism always operates on a combination of DeploymentRegion + DC location + ApplicationBuild. You can view each of these combinations as a standalone environment on which the scaling mechanism operates.

This means that the Scaler iterates over all the regions in your DeploymentProfile. Within each region it iterates over all DC locations, and per location it iterates over all the ApplicationBuilds defined in the GameApplicationTemplate of your Deployment Configuration. For each ApplicationBuild it then checks whether the number of ApplicationInstances is too low, too high or just right.

To summarize, the scaling mechanism always operates on individual combinations of region + DC location + ApplicationBuild. Deployment of game servers first occurs on bare metal servers and when they are full, the Scaler continues to deploy onto any configured cloud locations.
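The iteration order described above can be sketched roughly as follows. This is only an illustration, not the Scaler's actual code: the `Environment` class, the shape of `profile` (a mapping from region name to DC location names) and the function names are assumptions made for this example. The comparison against `bufferValue` is likewise an assumed way of expressing the "too low, too high or just right" check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """One standalone combination the scaling check runs on (hypothetical type)."""
    region: str
    dc_location: str
    application_build: str


def iter_environments(profile, builds):
    """Yield every region + DC location + ApplicationBuild combination.

    `profile` maps a region name to its list of DC location names
    (an assumed shape, chosen for illustration only).
    """
    for region, locations in profile.items():
        for location in locations:
            for build in builds:
                yield Environment(region, location, build)


def scaling_delta(free_instances, buffer_value):
    """Positive: instances to deploy. Negative: instances to remove. Zero: just right."""
    return buffer_value - free_instances


profile = {"europe": ["amsterdam", "frankfurt"], "us": ["dallas"]}
for env in iter_environments(profile, ["build-a", "build-b"]):
    print(env)
```

Each yielded combination is checked independently, so a shortage in one DC location does not affect the decision made for another.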

Host selection (round robin strategy)

When the scaling mechanism needs to deploy a new ApplicationInstance, it will first look for a usable bare metal host (BM). If you have run out of BM hosts, the Scaler will continue with cloud VMs, provided you have configured cloud locations in your DeploymentProfile.

A selected BM or VM host will be filled completely with instances before the mechanism looks for the next free host. The number of instances that can be deployed onto a host is defined individually for each BM or VM instance type and is explained in the chapter "Maximum number of instances on a host".
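As a rough sketch of the selection rule above (bare metal before cloud VMs, and a host that is already in use before an empty one), consider the following. The `Host` class and `select_host` function are hypothetical names for this example and do not represent the Scaler's real data model.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    kind: str            # "BM" (bare metal) or "VM" (cloud)
    max_instances: int   # per-host limit, defined per instance type
    instances: int = 0

    def has_room(self):
        return self.instances < self.max_instances


def select_host(hosts):
    """Return the host the next instance should go to, or None if all are full."""
    candidates = [h for h in hosts if h.has_room()]
    if not candidates:
        return None
    # Bare metal first; within the same kind, prefer a host that already
    # runs instances so it is filled completely before an empty one is used.
    return min(candidates, key=lambda h: (h.kind != "BM", h.instances == 0))


hosts = [Host("bm-1", "BM", 2), Host("vm-1", "VM", 4)]
for _ in range(3):
    host = select_host(hosts)
    host.instances += 1
    print(host.name)
```

With these example hosts, the first two instances land on `bm-1`, and only the third goes to `vm-1`.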

Host selection with multiple items in a containerLocation versus multiple containerLocations

When you define multiple items within a containerLocation they will be treated in a round-robin fashion. When you have multiple containerLocations they will be processed sequentially.

Here is a simple example:

- If your primary containerLocation contains both Google Cloud and Azure, the Scaler ensures that both run an equal number of Game Servers, always filling a VM completely before starting the next one.

If you have 8-core machines on Google Cloud and 4-core machines on Azure, and you request 16 Game Servers, you will end up with the following combination:

- 1 Google Cloud and 2 Azure VMs: (8 + 4 + 4 = 16 Game Servers).
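One way to reproduce the arithmetic of this example is sketched below. It assumes that each VM hosts one Game Server per core (as the 8 + 4 + 4 sum suggests) and that the next VM always goes to the provider currently running the fewest Game Servers, with ties resolved in configuration order. Both the `plan_vms` function and this tie-breaking rule are assumptions for illustration, not a description of the Scaler's internals.

```python
def plan_vms(servers_per_vm, requested):
    """Plan which provider each new VM is created on.

    servers_per_vm: provider name -> Game Servers one VM can host (assumed
                    to equal the core count in this example).
    requested:      total number of Game Servers needed.
    Returns (list of providers in creation order, Game Servers per provider).
    """
    running = {name: 0 for name in servers_per_vm}
    created = []
    while sum(running.values()) < requested:
        # Pick the provider with the fewest Game Servers so far;
        # min() keeps configuration order when counts are equal.
        name = min(servers_per_vm, key=lambda n: running[n])
        running[name] += servers_per_vm[name]  # the VM is filled completely
        created.append(name)
    return created, running


created, running = plan_vms({"google": 8, "azure": 4}, 16)
print(created)
print(running)
```

For the inputs above this yields one Google Cloud VM followed by two Azure VMs, with 8 Game Servers on each provider.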

Example when using multiple containers

When you use multiple containers, the process works differently.

- Container A is set up the same as in the previous example: you have 2 Azure VMs and 1 Google Cloud VM.

When Google Cloud no longer has any capacity, the following occurs:

- The machine will switch over to Azure.

- Also, the secondary machine needs to be out of quota.

When you have a primary and a secondary with Google Cloud and Azure, the switchover occurs when you cannot spin up any more VMs within the primary. If you request 16 game instances and Azure is out of capacity, you get 2 Google Cloud VMs.

If neither Google Cloud nor Azure has any capacity left, the following occurs:

- It will switch over to the secondary container.

- Within the secondary container (for example, one containing Amazon and Tencent), a round robin is performed again across those 2 providers until the primary has capacity again.
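The sequential fallback between containers can be sketched as follows. The `pick_container_items` function and the `has_capacity` callback are hypothetical; how capacity or quota is actually detected is not covered here.

```python
def pick_container_items(container_locations, has_capacity):
    """Return the usable items of the first container that still has capacity.

    container_locations: list of containers in priority order, each a list
                         of provider names (assumed shape for this sketch).
    has_capacity:        callable taking a provider name and returning a bool
                         (a stand-in for a real capacity or quota check).
    """
    for items in container_locations:
        usable = [provider for provider in items if has_capacity(provider)]
        if usable:
            # Round robin would then be applied across these items only.
            return usable
    return []


containers = [["google", "azure"], ["amazon", "tencent"]]
exhausted = {"google", "azure"}
print(pick_container_items(containers, lambda p: p not in exhausted))
```

Because the containers are evaluated in order on every call, the primary is used again as soon as one of its items regains capacity.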

Initializing an empty host

When a new, empty host (BM or VM) has been selected for game server deployment, the scaling mechanism will first deploy all the utilities (sidecars) defined in your UtilityDeploymentTemplate. After that, the new game server(s) are deployed.

Removing instances (downscaling)

If at any moment in time too many application instances are deployed, the scaling mechanism will start removing unused instances until the number of free / unallocated instances equals the bufferValue again.

If you have application instances deployed onto VMs, we will remove those first. Only empty instances are removed, of course. If no VMs are in use, or if there are no VMs with free / unallocated instances, the Scaler will continue downscaling instances on bare metal hosts.

The host with the fewest application instances (or, as a secondary criterion, the most free instances) will be selected as the host to start removing instances from. The aim is to empty VMs as quickly as possible so that we can destroy them, reducing cloud hosting cost as much as possible.
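The downscaling rules in this section can be approximated with the sketch below. It is a simplification under stated assumptions: the `Host` class and `downscale` function are hypothetical, and the hosts are ordered once up front (VMs first, then fewest instances, then most free instances) rather than re-evaluated after every removal.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    kind: str    # "VM" or "BM"
    total: int   # application instances deployed on this host
    free: int    # free / unallocated instances among them


def downscale(hosts, buffer_value):
    """Remove free instances until the free count equals buffer_value.

    Returns the host name of each removed instance, in removal order.
    Allocated (occupied) instances are never touched.
    """
    surplus = sum(h.free for h in hosts) - buffer_value
    removed = []
    # VMs before bare metal, then fewest instances, then most free instances.
    ordered = sorted(hosts, key=lambda h: (h.kind != "VM", h.total, -h.free))
    for host in ordered:
        while surplus > 0 and host.free > 0:
            host.free -= 1
            host.total -= 1
            surplus -= 1
            removed.append(host.name)
    return removed


hosts = [Host("bm-1", "BM", 10, 4), Host("vm-1", "VM", 6, 1), Host("vm-2", "VM", 2, 2)]
print(downscale(hosts, buffer_value=3))
```

In this example `vm-2` is emptied first, which makes it a candidate for destruction, while `vm-1` keeps its occupied instances.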

Keep in mind though that we do not control (gaming) clients already playing on a game server deployed onto a VM. That means that if your game server hosts matches that do not necessarily end, or take a long time, a VM cannot be destroyed until all those players have left the game server. If your game falls into that category you may want to invest in a mechanism that can transfer players to another game server, in an attempt to reduce cloud hosting costs.

Cleaning up a host when it's empty

After the last game server instance has been removed from a host, any sidecars (utilities) will also be removed. If the host is a VM, the VM will be destroyed. A bare metal host will become usable again for deployments (for the same or another environment / fleet / any other purpose).
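The cleanup order can be summarized in a few lines. The `cleanup_actions` function and its action strings are purely illustrative and do not correspond to real API calls.

```python
def cleanup_actions(kind, game_server_count, utilities):
    """Return the ordered cleanup steps for a host.

    kind:              "VM" or "BM".
    game_server_count: game server instances still on the host.
    utilities:         names of the sidecars (utilities) deployed on the host.
    An empty list means the host is still in use and nothing is cleaned up.
    """
    if game_server_count > 0:
        return []
    actions = [f"remove utility {name}" for name in utilities]
    # A VM is destroyed; a bare metal host is made available again.
    actions.append("destroy VM" if kind == "VM" else "release bare metal host")
    return actions


print(cleanup_actions("VM", 0, ["log-shipper"]))
print(cleanup_actions("BM", 0, ["log-shipper"]))
```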